AI connectors: use cases, benefits, and features

As AI companies look to help business customers get the most out of the large language models (LLMs) they use, they’ll need to support integrations with their customers’ business applications.

We’ll break down why that is and how AI connectors can be the most effective means of supporting these integrations. But to start, let’s align on the definition of an AI connector.

What is an AI connector?

An AI connector is a third-party tool that lets a company support integrations between an LLM and any number of business applications, such as file storage, ticketing, HRIS, or accounting solutions.


AI connector use cases

Here are just a few ways that AI companies can leverage these connectors.

Answering employees' questions

Say your company offers an enterprise search solution that allows users to ask questions related to their work or employer.

By integrating your solution with your customers’ CRMs, file storage platforms, HRISs, and other systems of record, you give the LLM your solution uses access to the information it needs to generate accurate answers quickly.

For example, an account manager can use your solution to ask where the team onsite will take place, how healthy one of their accounts is, and what the criteria are for escalating issues. For each question, the user gets not only a plain-text answer but also the sources that contain it, so they can drill down further.
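To make the "answer plus sources" flow concrete, here is a minimal Python sketch. It assumes the connector has already synced documents from the customer’s systems into a simple list. The `Document` type, the source identifiers, and the sample records are hypothetical. The keyword-overlap ranking is a deliberately simple stand-in for the vector search a production system would more likely use. The LLM call itself is left out.

```python
from dataclasses import dataclass


@dataclass
class Document:
    source: str  # hypothetical identifier, e.g. "<system>:<record or file>"
    text: str


def tokenize(text: str) -> set[str]:
    # Lowercase and strip basic punctuation so "onsite?" matches "onsite"
    words = (w.strip(".,?!:;").lower() for w in text.split())
    return {w for w in words if w}


def retrieve(question: str, docs: list[Document], k: int = 3) -> list[Document]:
    """Rank synced documents by word overlap with the question (a stand-in for vector search)."""
    q = tokenize(question)
    scored = [(len(q & tokenize(d.text)), d) for d in docs]
    scored = [pair for pair in scored if pair[0] > 0]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [d for _, d in scored[:k]]


def build_prompt(question: str, hits: list[Document]) -> str:
    """Number each snippet so the LLM can cite sources the user can drill into."""
    context = "\n".join(f"[{i}] ({d.source}) {d.text}" for i, d in enumerate(hits, start=1))
    return (
        "Answer using only the sources below and cite them by number.\n"
        f"{context}\n\nQuestion: {question}"
    )


# Hypothetical records synced from file storage, a CRM, and an HRIS
docs = [
    Document("file_storage:team-onsite.docx", "The team onsite will take place in Denver in May."),
    Document("crm:account/acme", "Acme account health is at risk after two missed renewals."),
    Document("hris:handbook", "Escalate issues to your manager after 48 hours."),
]
question = "Where will the team onsite take place?"
hits = retrieve(question, docs, k=1)
print(build_prompt(question, hits))
```

In practice, the prompt would go to whichever LLM your product uses. The numbered sources let your UI link each part of the answer back to the underlying record.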


Recommending high-fit leads

Say you offer a sales automation solution that recommends leads to users.

To help your solution continually refine its recommendations, you can integrate with customers’ CRMs and feed a wide range of customer data to the LLM you use. This helps the LLM both identify your customers’ high-fit leads and learn from its past recommendations, since it can see how far and how quickly the recommended leads move through the sales cycle.

The integration can also work bidirectionally: with a single click in your platform, users can add a recommended lead to their CRM.
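A one-click "add to CRM" action typically comes down to a single authenticated write request. The sketch below uses Python’s standard library to build such a request without sending it. The endpoint URL, the `X-Customer-Id` header, and the field names are hypothetical placeholders. Each connector documents its own write API and authentication scheme.

```python
import json
import urllib.request

# Hypothetical endpoint; a real AI connector documents its own URL, auth, and field names.
LEADS_URL = "https://api.example.com/crm/leads"


def build_add_lead_request(lead: dict, api_key: str, customer_id: str) -> urllib.request.Request:
    """Build the write-back request fired when a user clicks "Add to CRM"."""
    body = json.dumps({
        "first_name": lead["first_name"],
        "last_name": lead["last_name"],
        "company": lead["company"],
        "email": lead["email"],
    }).encode("utf-8")
    return urllib.request.Request(
        LEADS_URL,
        data=body,
        method="POST",
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
            # Hypothetical header telling the connector which customer's CRM to write to
            "X-Customer-Id": customer_id,
        },
    )


req = build_add_lead_request(
    {"first_name": "Ada", "last_name": "Lovelace", "company": "Acme", "email": "ada@acme.test"},
    api_key="YOUR_API_KEY",
    customer_id="customer-123",
)
# Sending it would be: urllib.request.urlopen(req)  (not executed here)
```

The same pattern applies to the ATS example that follows. Only the endpoint and the record fields would change.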

Sourcing ideal candidates

Imagine you offer a recruiting automation solution that uses an LLM to provide high-fit candidate recommendations.

To ensure your LLM keeps recommending strong candidates, you can integrate with customers’ ATS solutions and continually ingest data on the candidates marked as hired. You can also feed the ATS data on the candidates you recommended back to your LLM to help it learn from, and improve, its recommendations.

Finally, as in the previous example, the integration can work bidirectionally: users can add any recommended candidate to their ATS with a single click.

Benefits of AI connectors

Here are just a few of the benefits that come with using AI connectors.

Saves developer resources

Forcing your developers to build and maintain each integration would require countless hours of their time. This is especially true for AI-based integrations, as you’ll likely want to connect the LLM you use to several applications across categories.

Since an AI connector takes much of this work off your developers’ plates, they can save significant time and focus on the projects they’re uniquely equipped to handle and likely enjoy more (e.g., building out key features in your core product).

Accelerates your integrations’ time to market

Building a single integration with an LLM can also take your team several months. Multiply this by the number of integrations you want to build and you’ll likely face a timeline that stretches for years.

Using a unified API solution as your AI connector can act as a force multiplier on your integration timeline, since a single build lets you add several integrations at once.

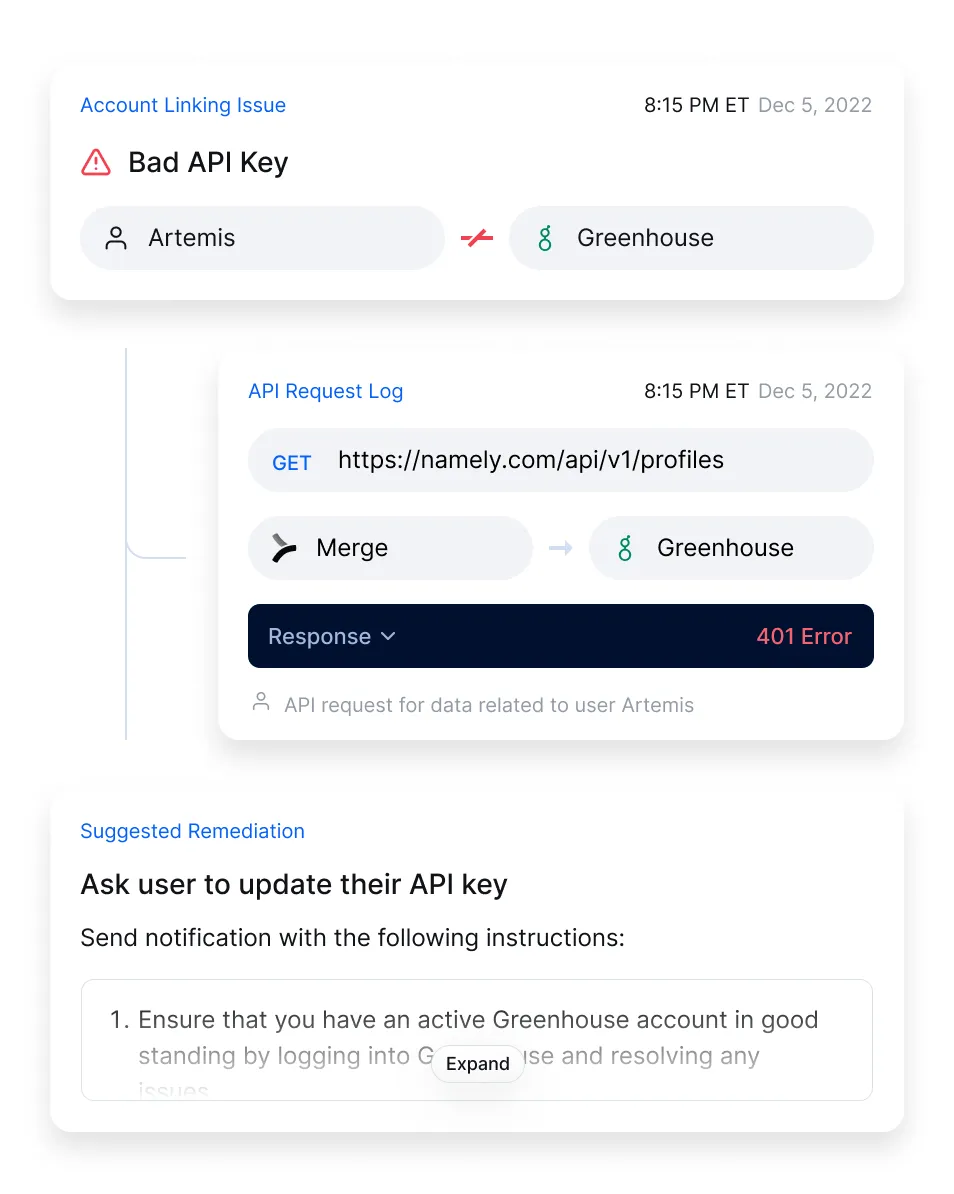
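The force-multiplier effect comes from coding against one common schema instead of each vendor’s format. The Python sketch below is a simplified illustration, not any particular product’s API. `crm_a` and `crm_b` are hypothetical providers with made-up payload shapes, and a unified API would maintain mappers like these on your behalf.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Contact:
    """Common schema your product codes against, whichever CRM the customer uses."""
    first_name: str
    last_name: str
    email: str


# Hypothetical per-provider payload shapes; a unified API maintains mappers like these for you.
def from_crm_a(raw: dict) -> Contact:
    return Contact(raw["FirstName"], raw["LastName"], raw["Email"])


def from_crm_b(raw: dict) -> Contact:
    props = raw["properties"]
    return Contact(props["firstname"], props["lastname"], props["email"])


MAPPERS: dict[str, Callable[[dict], Contact]] = {"crm_a": from_crm_a, "crm_b": from_crm_b}


def normalize(provider: str, raw: dict) -> Contact:
    """Single entry point: supporting another CRM means registering one more mapper."""
    if provider not in MAPPERS:
        raise ValueError(f"unsupported provider: {provider}")
    return MAPPERS[provider](raw)


a = normalize("crm_a", {"FirstName": "Ada", "LastName": "Lovelace", "Email": "ada@acme.test"})
b = normalize("crm_b", {"properties": {"firstname": "Ada", "lastname": "Lovelace", "email": "ada@acme.test"}})
print(a == b)  # prints True: both providers yield the same normalized record
```

Everything downstream of `normalize`, including what you send to the LLM, only ever sees `Contact`. That is why one build can cover several integrations.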

Helps you debug issues quickly

Integration issues are bound to crop up over time, and many of them can stop the LLM you use from receiving invaluable data.

To prevent this from happening, or at least minimize disruptions, the AI connector can offer out-of-the-box features that let you detect, diagnose, and resolve issues.
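As a rough illustration of "detect, diagnose, and resolve," the Python sketch below maps the HTTP status of a failed sync to a likely next step. It also retries transient failures with exponential backoff. The status-to-action rules and the `run_sync` callable are assumptions for illustration. Actual connectors expose their own logs, alerts, and retry behavior.

```python
import time
from typing import Callable


def diagnose(status: int) -> str:
    """Map the HTTP status of a sync attempt to a likely next step."""
    if 200 <= status < 300:
        return "ok"
    if status in (401, 403):
        return "reauthenticate"  # credentials revoked or scopes changed: ask the customer to reconnect
    if status == 429 or status >= 500:
        return "retry"  # rate limit or provider outage: usually transient
    return "investigate"  # e.g. 400 or 404: likely a payload or mapping problem


def sync_with_retry(run_sync: Callable[[], int], max_attempts: int = 3, base_delay: float = 1.0) -> str:
    """Run a sync until it succeeds or hits a non-retryable error, backing off 1s, 2s, 4s, ..."""
    for attempt in range(max_attempts):
        action = diagnose(run_sync())
        if action != "retry":
            return action
        if attempt < max_attempts - 1:
            time.sleep(base_delay * 2 ** attempt)
    return "alert"  # still failing: flag it before the LLM's data goes stale


# Simulated provider responses: an outage, a rate limit, then success
responses = iter([503, 429, 200])
print(sync_with_retry(lambda: next(responses), base_delay=0))  # prints "ok"
```

Separating diagnosis from retrying keeps the rules easy to audit. Transient errors resolve themselves, while authentication and mapping errors are surfaced to a person instead of being retried forever.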


How AI connectors work

While AI connectors vary depending on the solutions you evaluate, they generally offer the following functionality and features:

- API-based connectivity: They allow you to build API-based integrations between business applications and LLMs, which support reliable, secure, and frequent data syncs

- Internal and/or customer-facing support: They can support internal integrations (i.e., between an LLM used internally and other applications within your organization) or customer-facing integrations (i.e., between the LLM your product uses and your customers’ applications)

- Cross-category integration support: Since LLMs typically need a high volume of diverse customer data, AI connectors often support several categories of integrations, including CRM, HRIS, file storage, ticketing, ERP, and ATS

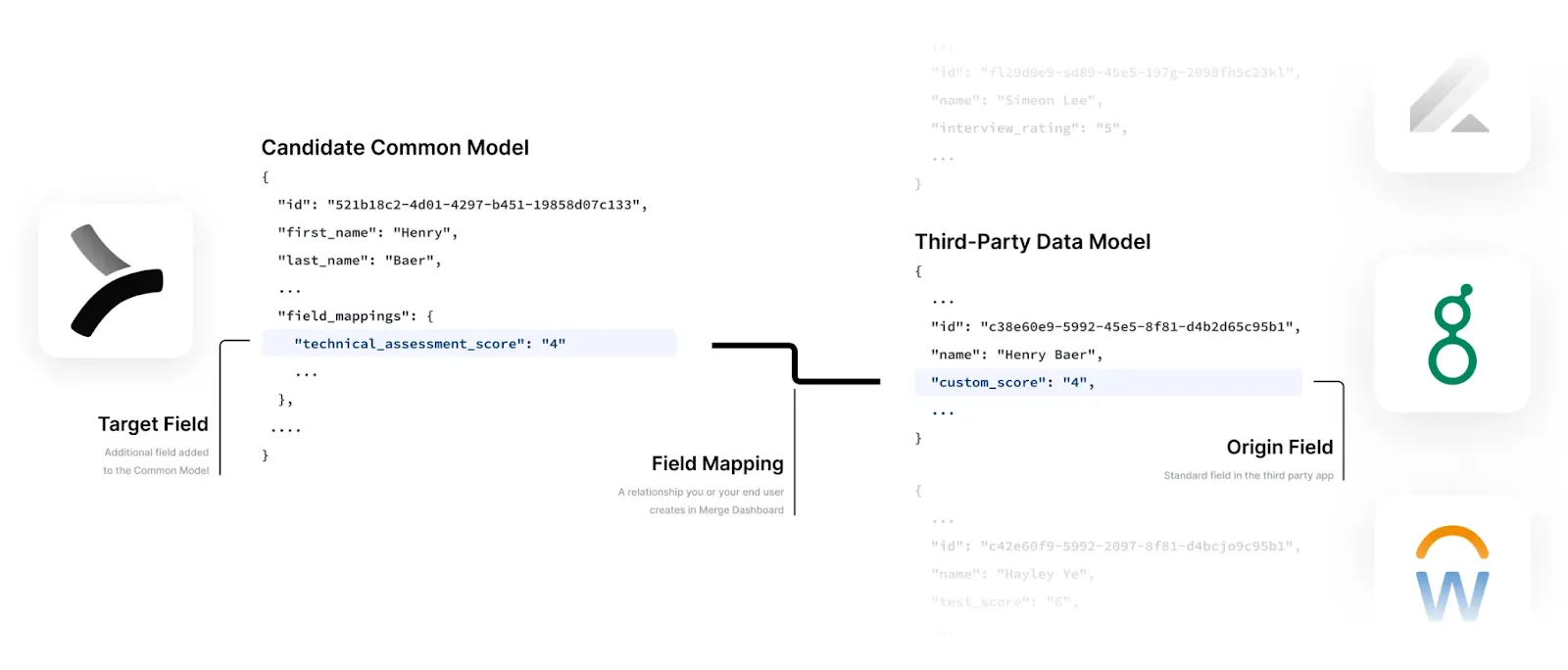

- Advanced syncing capabilities: They may be able to access and sync custom objects and fields, which allows the LLM you use to provide more refined and helpful outputs

- Normalized data: The AI connector may consistently provide data in a normalized schema, making it easier for the LLM you use to interpret the data correctly and use it effectively

- Enterprise-grade security: They typically encrypt data at rest and in transit, provide role-based access controls, track user activities through logs, and more to help you keep data secure
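To show how normalized data and custom fields help an LLM, here is a small Python sketch. It turns normalized ticket records into consistent, compact lines that can be placed in a prompt. The `Ticket` fields and the custom-field names are hypothetical. Real connectors define their own common models and expose custom fields in their own way.

```python
from dataclasses import dataclass, field


@dataclass
class Ticket:
    """Hypothetical normalized ticket: same fields regardless of the source ticketing tool."""
    id: str
    title: str
    status: str
    priority: str
    # Customer-specific fields synced alongside the standard ones (hypothetical names)
    custom_fields: dict[str, str] = field(default_factory=dict)


def to_context(tickets: list[Ticket]) -> str:
    """Render tickets as one consistently ordered line each, for use in an LLM prompt."""
    lines = []
    for t in tickets:
        line = f"ticket {t.id} | {t.title} | status={t.status} | priority={t.priority}"
        # Sort custom fields so the same record always renders identically
        extras = "; ".join(f"{k}={v}" for k, v in sorted(t.custom_fields.items()))
        lines.append(f"{line} | {extras}" if extras else line)
    return "\n".join(lines)


tickets = [
    Ticket("T-1", "Login fails", "open", "high", {"region": "EMEA", "arr_tier": "enterprise"}),
    Ticket("T-2", "Export is slow", "closed", "low"),
]
print(to_context(tickets))
```

Because every source maps to the same fields in the same order, the prompt format never changes when a customer switches tools. The custom fields carry the extra context that makes outputs more specific.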
