
How to get your Gemini API key (5 steps)

Connecting Gemini (Google’s LLM, formerly known as Bard) with your internal applications or your product can fundamentally change how your employees interact with those applications and how your customers use your product.

But before you can access one of Gemini’s models via API requests, you’ll need to generate an API key in Google AI Studio.

We’ll help you do just that by walking through each of the 5 steps you’ll need to take.

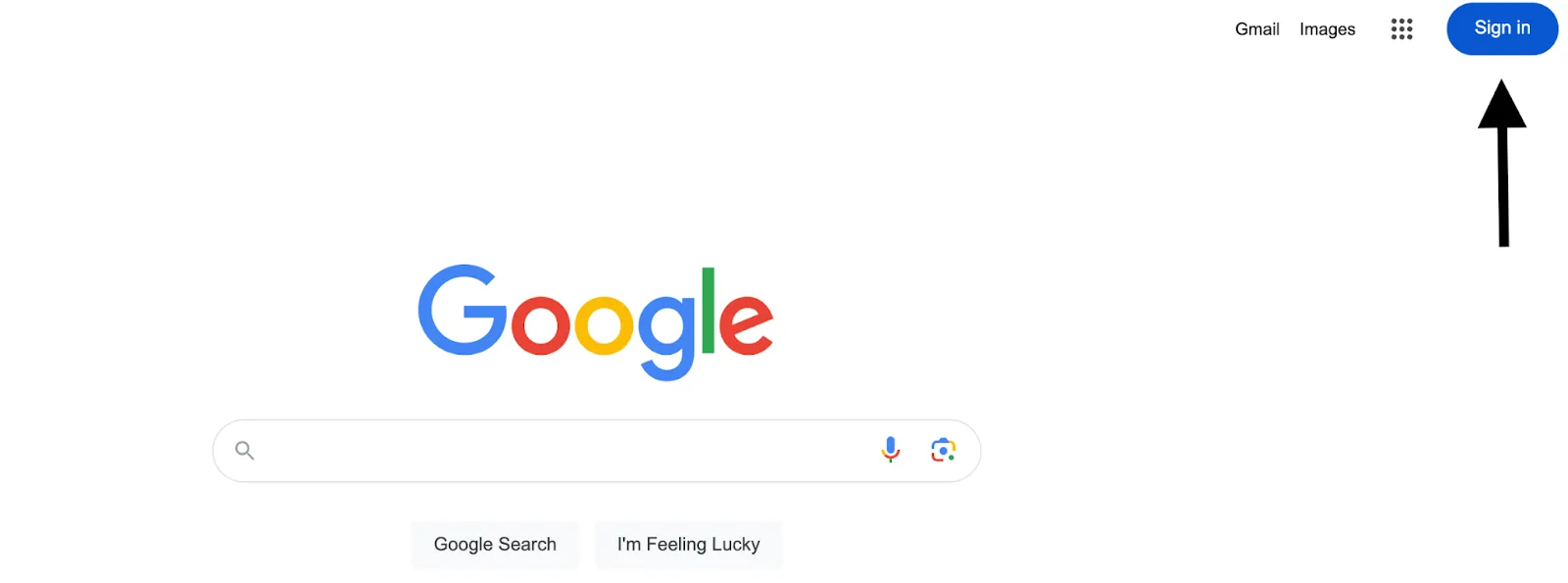

Step 1: Log in to your Google account

You should see the “Sign in” button in the top-right corner of Google’s home page.


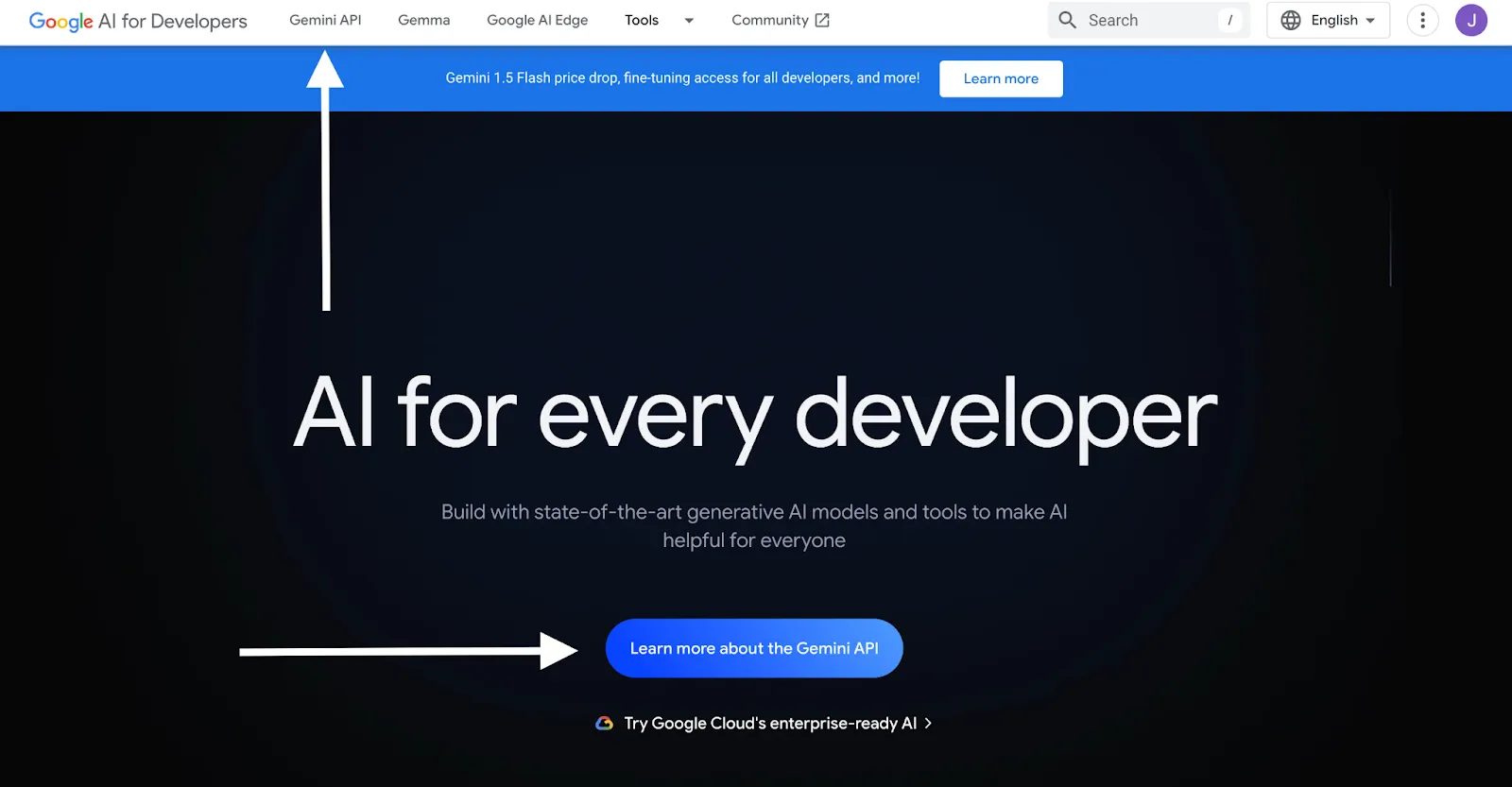

Step 2: Visit Google AI Studio

You can find this landing page here. Then click the “Gemini API” tab or the “Learn more about the Gemini API” button.

Alternatively, you can visit the Gemini API landing page directly.


Step 3: Click “Get API key in Google AI Studio”

This button should appear in the center of the page.

Step 4: Review and approve the terms of service

You should then see a pop-up asking you to consent to the Google APIs Terms of Service and the Gemini API Additional Terms of Service.

Optionally, you can also opt in to emails that keep you up to date on Google AI and invite you to participate in Google AI research studies.

Check the first box (and the others, if you’d like), then click “Continue.”


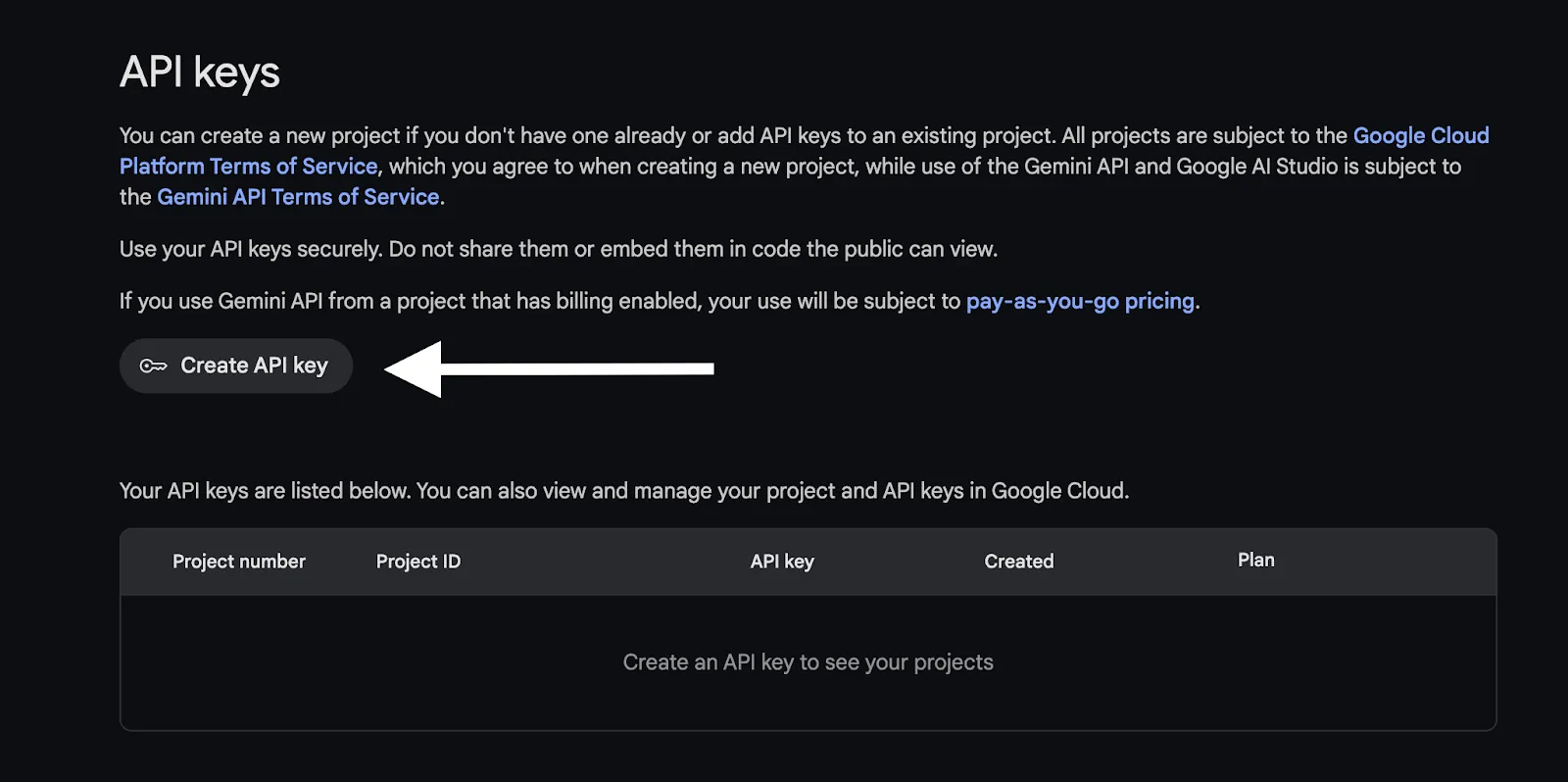

Step 5: Create your API key

You can now click “Create API key.”

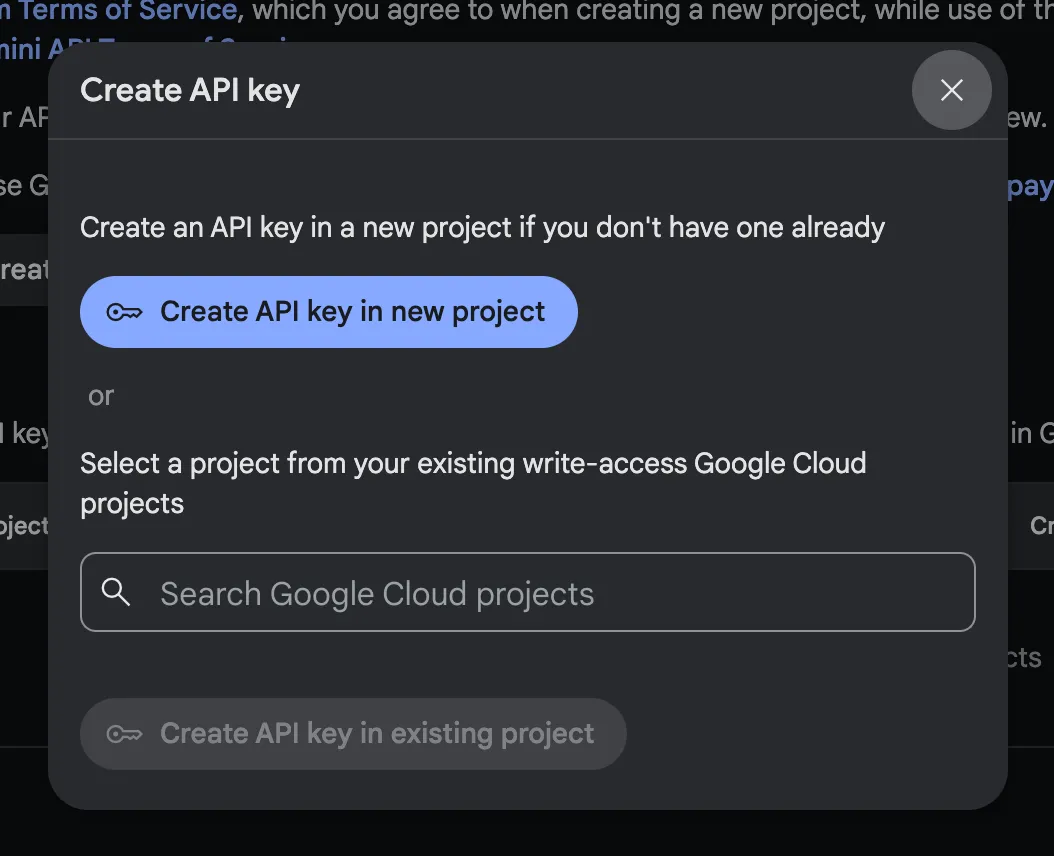

You’ll then have the option to create an API key in a new project or in an existing one.

Once you’ve chosen one of these options, your API key should be auto-generated!

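Store this API key in a secure location, such as a secrets manager or an environment variable, and keep it out of source control: anyone who has the key can send requests under your project.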
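
Hardcoding the key in source files is one of the easiest ways to leak it. A minimal sketch, assuming your app receives the key through an environment variable (the name `GEMINI_API_KEY` and both helper names are our own assumptions, not requirements of the API), reads the key at startup, fails loudly if it’s missing, and produces a log-safe version of it:

```python
import os


class MissingApiKeyError(RuntimeError):
    """Raised when no Gemini API key is configured."""


def load_gemini_api_key(env_var: str = "GEMINI_API_KEY") -> str:
    """Read the API key from an environment variable instead of source code.

    GEMINI_API_KEY is an assumed variable name; use whatever name your
    deployment or secrets manager provides.
    """
    key = os.environ.get(env_var, "").strip()
    if not key:
        raise MissingApiKeyError(f"Set {env_var} to your Gemini API key.")
    return key


def redact(key: str) -> str:
    """Return a log-safe form of the key that shows at most its last 4 characters."""
    if len(key) <= 4:
        return "*" * len(key)
    return "*" * (len(key) - 4) + key[-4:]
```

In a shell, you’d run `export GEMINI_API_KEY="your-key-here"` before starting the app, and log `redact(key)` rather than the key itself when you need to confirm which key is loaded.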


Other considerations for building to Gemini’s API

Before building to Gemini’s API, you should also look into and understand the following areas:

Pricing plans

Pricing varies across Gemini 1.5 Flash, Gemini 1.5 Pro, and Gemini 1.0 Pro, but each model comes with a free tier and a pay-as-you-go tier.

The main differences between the two tiers are the rate limits, the pricing for inputs and outputs, whether context caching is available, and whether your inputs and outputs are used to improve Google’s products.
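
Because pay-as-you-go pricing is charged separately for input and output tokens, you can estimate a request’s cost with simple arithmetic. The sketch below assumes a flat rate per 1 million tokens in each direction; the rates are parameters you’d copy from Gemini’s pricing page (no real prices are hardcoded), and it ignores any tiering or context-caching discounts a given model may have. The names `TokenRates` and `estimate_cost` are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TokenRates:
    """Pay-as-you-go prices in USD per 1 million tokens (fill in from the pricing page)."""
    input_per_million: float
    output_per_million: float


def estimate_cost(input_tokens: int, output_tokens: int, rates: TokenRates) -> float:
    """Estimated USD cost of one request: tokens / 1,000,000 * rate, per direction."""
    if input_tokens < 0 or output_tokens < 0:
        raise ValueError("token counts must be non-negative")
    return (input_tokens / 1_000_000 * rates.input_per_million
            + output_tokens / 1_000_000 * rates.output_per_million)
```

For example, with hypothetical rates of $1.00 (input) and $2.00 (output) per million tokens, `estimate_cost(500_000, 250_000, TokenRates(1.0, 2.0))` returns `1.0`.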

You can learn more about Gemini’s pricing plans here.

Rate limits

As mentioned above, rate limits differ across models and tiers. Each model and tier is limited in three ways: requests per minute (RPM), tokens per minute (TPM), and requests per day (RPD).
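
If you’d rather not discover a limit through failed requests, you can track all three dimensions on the client. This is a minimal sketch, not an official mechanism: the class name `GeminiRateLimiter` is hypothetical, the limit values are ones you supply from Gemini’s rate-limit table, the per-request token count is an estimate you pass in, and requests per day are tracked over a rolling 24-hour window, which may not match the schedule on which Google resets daily quotas.

```python
import time
from collections import deque
from typing import Callable, Deque, Tuple


class GeminiRateLimiter:
    """Client-side guard for RPM, TPM, and RPD limits that you supply.

    Uses a sliding 60-second window for RPM/TPM and a rolling 24-hour
    window for RPD (an assumption; your real quota may reset differently).
    """

    def __init__(self, rpm: int, tpm: int, rpd: int,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.rpm, self.tpm, self.rpd = rpm, tpm, rpd
        self.clock = clock
        # (timestamp, tokens) for each request sent in the last minute.
        self.minute: Deque[Tuple[float, int]] = deque()
        # Timestamps of requests sent in the last 24 hours.
        self.day: Deque[float] = deque()

    def _evict(self, now: float) -> None:
        """Drop entries that have aged out of their windows."""
        while self.minute and now - self.minute[0][0] >= 60:
            self.minute.popleft()
        while self.day and now - self.day[0] >= 86_400:
            self.day.popleft()

    def try_acquire(self, tokens: int) -> bool:
        """Record a request of `tokens` tokens if all three limits allow it."""
        now = self.clock()
        self._evict(now)
        tokens_used = sum(t for _, t in self.minute)
        if (len(self.minute) >= self.rpm
                or tokens_used + tokens > self.tpm
                or len(self.day) >= self.rpd):
            return False
        self.minute.append((now, tokens))
        self.day.append(now)
        return True
```

Call `try_acquire(estimated_tokens)` before each request; when it returns `False`, queue or delay the request instead of sending it. Because the state lives in memory, this only protects a single process; several workers sharing one key would need a shared store.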

Learn more about Gemini’s rate limits here.

Errors to look out for

While you may experience a wide range of errors, here are a few common ones to be aware of:

- 400 INVALID_ARGUMENT: the request body is malformed, for example because of a typo or a missing field

- 403 PERMISSION_DENIED: your API key doesn’t have the permissions needed to access the model, or you’re trying to access a tuned model without going through the proper authentication process

- 404 NOT_FOUND: the requested resource wasn’t found on the server

- 500 INTERNAL: an unexpected error occurred on Google’s side; you can try the same request with another model, or wait and retry it
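
The list above suggests a simple policy: fix the request when you get a 400, 403, or 404, and retry a 500 after a pause. Here’s a minimal sketch of that policy with exponential backoff and jitter. `GeminiHTTPError` is a hypothetical exception type standing in for however your HTTP client or SDK reports status codes, and falling back to another model isn’t shown. We also treat 429 RESOURCE_EXHAUSTED, which the API returns when you exceed a rate limit, as retryable, even though it isn’t in the list above.

```python
import random
import time
from typing import Callable, TypeVar

T = TypeVar("T")

# 500 INTERNAL and 429 RESOURCE_EXHAUSTED are treated as transient.
# 400, 403, and 404 mean the request itself must change, so they aren't retried.
RETRYABLE_STATUSES = {429, 500}


class GeminiHTTPError(Exception):
    """Hypothetical error type carrying the HTTP status of a failed API call."""

    def __init__(self, status: int, message: str = "") -> None:
        super().__init__(f"{status} {message}".strip())
        self.status = status


def call_with_retries(fn: Callable[[], T], max_attempts: int = 4,
                      base_delay: float = 1.0,
                      sleep: Callable[[float], None] = time.sleep) -> T:
    """Call fn(); retry retryable failures with exponential backoff and jitter."""
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    for attempt in range(1, max_attempts + 1):
        try:
            return fn()
        except GeminiHTTPError as err:
            if err.status not in RETRYABLE_STATUSES or attempt == max_attempts:
                raise
            # Wait base_delay * 2**(attempt - 1) seconds, plus up to 10% jitter.
            delay = base_delay * 2 ** (attempt - 1)
            sleep(delay + random.uniform(0, delay * 0.1))
    raise RuntimeError("unreachable")
```

The `sleep` parameter exists so you can swap in a no-op or an async-friendly wait in tests; in production the default `time.sleep` is fine for simple scripts.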

Learn more about the API errors you might encounter with Gemini here.

SDKs

To help you build integrations faster and with fewer issues, you can use one of the Gemini API’s SDKs.

SDKs are available for Python, Node.js, Go, Dart, Android, Swift, and the web, and you can also call the REST API directly.

You can learn more about each SDK’s prerequisites and installation instructions here.
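
If no SDK fits your stack, the REST API needs nothing more than an HTTPS client. The sketch below uses only Python’s standard library to build (but not send) a `generateContent` request against the v1beta endpoint, passing the key in the `x-goog-api-key` header, and to pull the text out of a response. The model name `gemini-1.5-flash` is just an example and the helper names are our own; check the API reference for the endpoint version and models you target.

```python
import json
import urllib.request

# v1beta REST base URL; swap in the version your integration targets.
BASE_URL = "https://generativelanguage.googleapis.com/v1beta/models"


def build_generate_request(api_key: str, prompt: str,
                           model: str = "gemini-1.5-flash") -> urllib.request.Request:
    """Build (but don't send) a POST request to the generateContent endpoint."""
    body = {"contents": [{"parts": [{"text": prompt}]}]}
    return urllib.request.Request(
        url=f"{BASE_URL}/{model}:generateContent",
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            # Sending the key in a header keeps it out of URLs and access logs.
            "x-goog-api-key": api_key,
        },
        method="POST",
    )


def extract_text(response_json: dict) -> str:
    """Concatenate the text parts of the first candidate; "" if there is none."""
    candidates = response_json.get("candidates", [])
    if not candidates:
        return ""
    parts = candidates[0].get("content", {}).get("parts", [])
    return "".join(part.get("text", "") for part in parts)
```

To send the request, open it with `urllib.request.urlopen(req)`, parse the body with `json.load`, and pass the result to `extract_text`. An empty string usually means the prompt or response was blocked, so inspect the raw JSON in that case.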

Capabilities available

Using the Gemini API, you can access a wide range of the LLM’s capabilities.

Here’s just a snapshot of what you can do:

- Text generation: Get outputs that summarize text, describe visual assets, translate text into another language, or create copy in a specific format (e.g., a blog post) and in a particular tone and voice

- Vision: Use an image or video as the input to get a summary of it or answers to specific questions about it

- Audio: Use an audio recording as the input to get a summary of it, answers to specific questions, or a transcription (you can specify the transcription’s length and which sections you’d like transcribed)

- Long context: With a Gemini model’s large context window (e.g., 2 million tokens with Gemini 1.5 Pro), you can include long-form text, videos, and audio recordings in your inputs to get answers to specific questions, summaries, captions for videos, and more
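
Images, audio, and video go into the same `contents` array as text, as additional parts. A minimal sketch, assuming a small image sent inline as base64 (Google documents a separate File API for larger media such as long videos), builds a request body that asks a question about an image. The helper name is hypothetical; the resulting body is posted to the model’s `generateContent` endpoint just like a text-only prompt.

```python
import base64
import json


def build_image_question_body(image_bytes: bytes, mime_type: str, question: str) -> dict:
    """Build a generateContent body that pairs an inline image with a text question."""
    return {
        "contents": [{
            "parts": [
                {"inline_data": {
                    "mime_type": mime_type,
                    # Inline media travels base64-encoded inside the JSON body.
                    "data": base64.b64encode(image_bytes).decode("ascii"),
                }},
                {"text": question},
            ]
        }]
    }
```

For example, you might read a file with `open("photo.jpg", "rb").read()` and call `build_image_question_body(data, "image/jpeg", "What is in this image?")`, then serialize the result with `json.dumps`.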

Safety filters

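The Gemini API has built-in protections against core harms, such as content that endangers child safety, and these can’t be adjusted. Beyond those, you can adjust four safety filters (harassment, hate speech, sexually explicit, and dangerous content) in a given request so that the outputs better fit your needs.

The API response includes a safety rating for each category, expressed as the probability that the content is unsafe (NEGLIGIBLE, LOW, MEDIUM, or HIGH). Whether content gets blocked depends on that rating and the threshold you’ve set for the category.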
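
Safety settings travel in the request body as a `safetySettings` list, and each response candidate carries a `safetyRatings` list. The sketch below maps friendly names (our own hypothetical keys) to the REST enum values for the four adjustable categories, validates the thresholds, and lists which categories were rated at or above a given probability. The enum spellings reflect the REST reference as we understand it; confirm them against the current docs before relying on them.

```python
from typing import Dict, List

# Friendly names (hypothetical) mapped to the REST API's category enums.
CATEGORIES = {
    "harassment": "HARM_CATEGORY_HARASSMENT",
    "hate_speech": "HARM_CATEGORY_HATE_SPEECH",
    "sexually_explicit": "HARM_CATEGORY_SEXUALLY_EXPLICIT",
    "dangerous": "HARM_CATEGORY_DANGEROUS_CONTENT",
}
THRESHOLDS = {"BLOCK_NONE", "BLOCK_ONLY_HIGH", "BLOCK_MEDIUM_AND_ABOVE", "BLOCK_LOW_AND_ABOVE"}
# Probability levels from least to most likely unsafe.
PROBABILITY_ORDER = ["NEGLIGIBLE", "LOW", "MEDIUM", "HIGH"]


def safety_settings(overrides: Dict[str, str]) -> List[Dict[str, str]]:
    """Turn {"harassment": "BLOCK_ONLY_HIGH", ...} into a safetySettings list."""
    settings = []
    for name, threshold in overrides.items():
        if name not in CATEGORIES or threshold not in THRESHOLDS:
            raise ValueError(f"unsupported setting: {name}={threshold}")
        settings.append({"category": CATEGORIES[name], "threshold": threshold})
    return settings


def flagged_categories(candidate: dict, at_or_above: str = "MEDIUM") -> List[str]:
    """List categories whose probability rating is at or above the given level."""
    cutoff = PROBABILITY_ORDER.index(at_or_above)
    return [
        rating["category"]
        for rating in candidate.get("safetyRatings", [])
        if rating.get("probability") in PROBABILITY_ORDER
        and PROBABILITY_ORDER.index(rating["probability"]) >= cutoff
    ]
```

You’d attach the list with `body["safetySettings"] = safety_settings({"harassment": "BLOCK_ONLY_HIGH"})`. If a candidate’s `finishReason` is `SAFETY`, its output was blocked, and `flagged_categories` shows which ratings were involved.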

You can learn more about using safety filtering with the Gemini API here.

Final thoughts

Your product integration requirements likely extend far beyond Gemini.

If you need to integrate your product with hundreds of third-party applications in popular software categories, such as CRM, HRIS, ATS, or accounting, you can simply connect to Merge’s Unified API.

Merge also provides comprehensive integration maintenance support and management tooling for your customer-facing teams, helping ensure that your integrations keep performing at a high level over time.

You can learn more about Merge by scheduling a demo with one of our integration experts.
